Virtual Data Lakes

This website provides information about Virtual Data Lakes. At the moment, it covers basic concepts and the Web API. Soon it will have more detailed information, plus resources that will help you use open source virtual data lakes in your solutions.

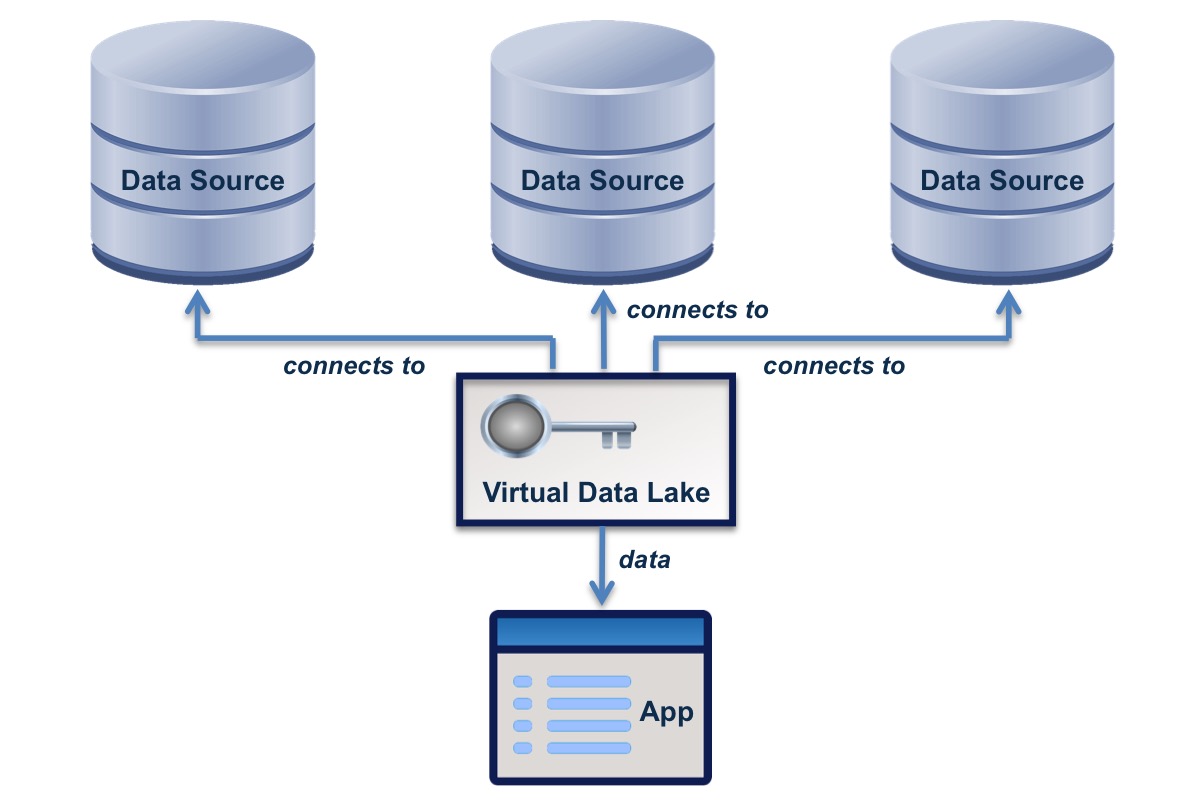

Virtual Data Lakes are a different kind of data platform, one that enables applications to mix and match data from different sources while applying distributed access control to ensure that the right people have the right data. They provide a form of data virtualization, are key building blocks for data-centered architecture, and are an ideal platform for data integration.

The core virtual data lake implementation has been stable for several years. It supports a number of live websites, including this one.

It has an API for use by data integration, analysis and transformation applications. Natural language processing (NLP) is a particular area of interest, and this site is available for use by collaborative open-source NLP projects.

This site is produced by Lacibus Ltd. This is where we publish the non-commercial side of our work, including open source development and standards activities. The commercial side of our work is described at Lacibus.com.

Please contact us to find out more about virtual data lakes and how to use them.